Submitted by LibRaw on

Let's take a close look, using RawDigger 1.2.13, at a dual-pixel raw file from the Canon 5D Mark IV, which can be downloaded here.

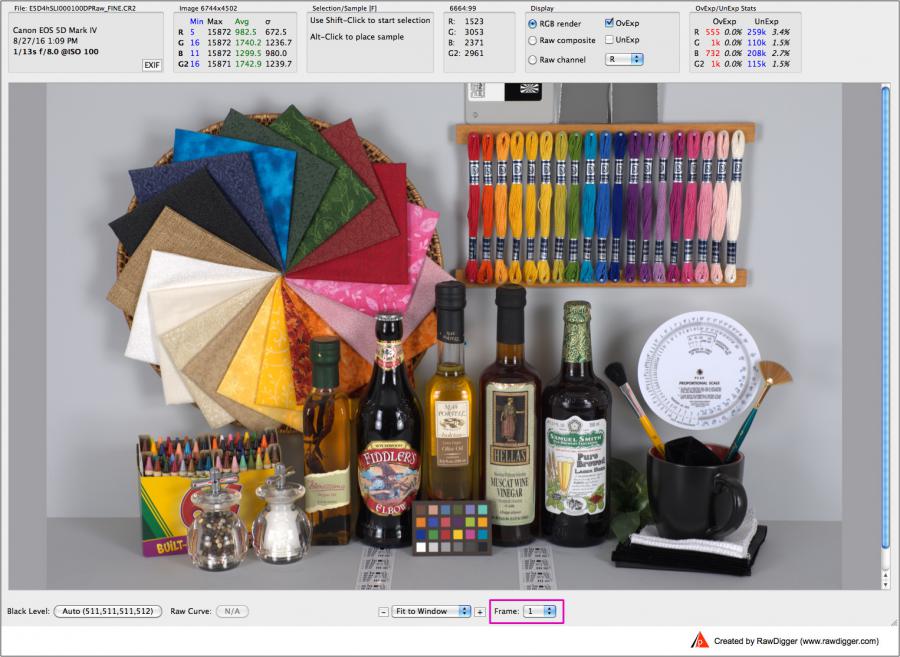

The dual-pixel raw contains 2 raw data sets; we will call them the main subframe (Frame 1 in RawDigger)...

... and auxiliary subframe (Frame 2 in RawDigger).

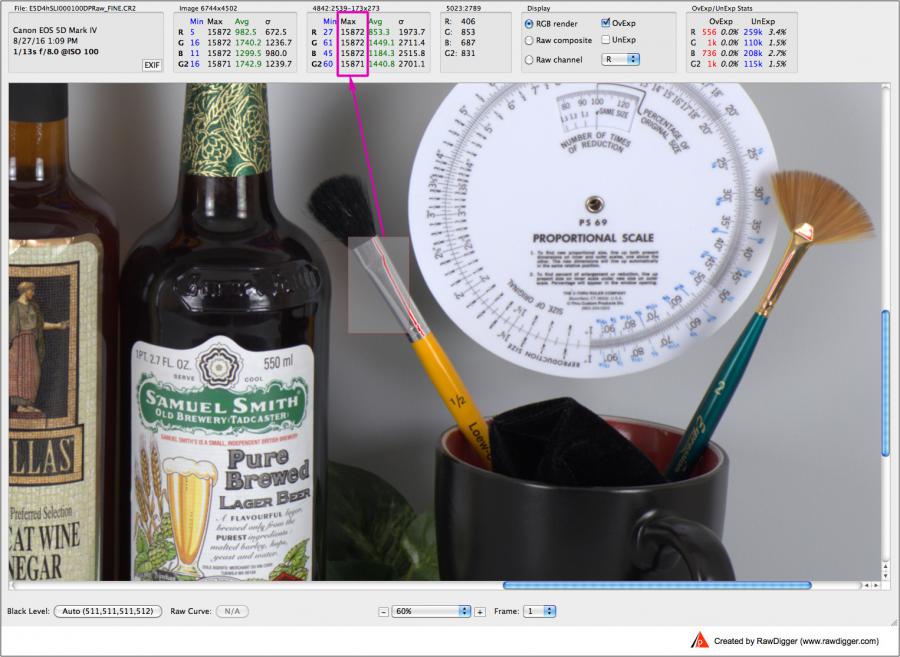

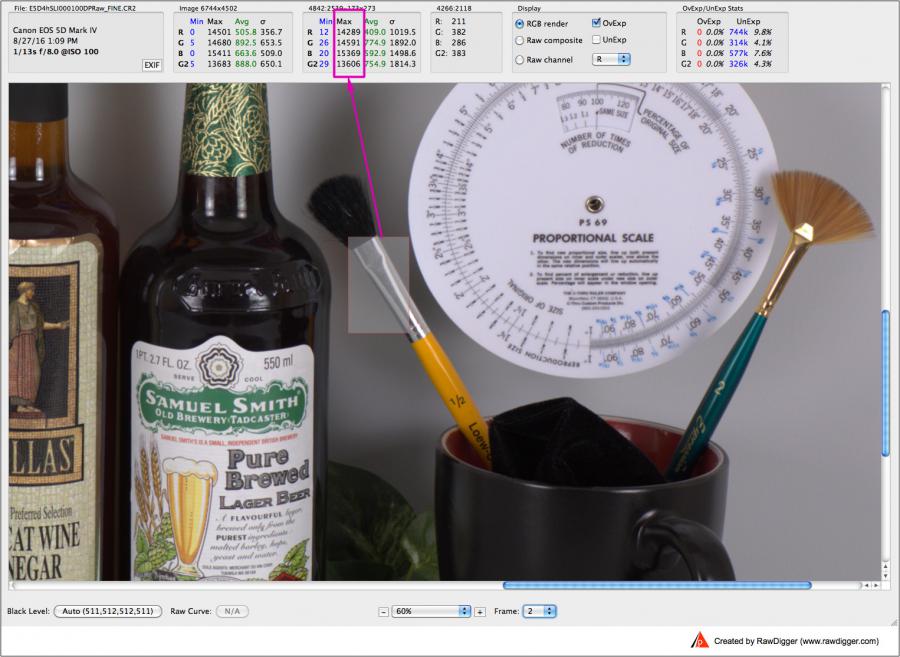

We will start by inspecting the main subframe. In this shot it contains blown-out specular highlights. Below is a screenshot with such a clipped area sampled: see the grey rectangle marking the selected area on the left brush. The red overlay on the specular highlight marks the blown-out pixels. The statistics for this selection are shown in the top panel of RawDigger, outlined in purple.

The Red, Green, and Blue channels all reach their maximum of 15872 = 16383 - 511, where 511 is the black level of these channels (the black levels are shown in the lower left corner of the screenshot), while the second green channel (G2) reaches 15871 because its black level is 512, one data number (DN) higher than the others.
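The clip-level arithmetic above can be written out as a minimal Python sketch. It assumes black-subtracted values (as in the figures quoted) and a 14-bit white level of 16383; the function names are hypothetical, not RawDigger's.

```python
# Minimal sketch: each channel's clip level is the 14-bit white level minus
# that channel's black level. Black levels are the ones quoted above for
# this file; the 16383 white level assumes the full 14-bit range is used.

WHITE_LEVEL = 2**14 - 1  # 16383, the largest 14-bit value

BLACK_LEVELS = {"R": 511, "G": 511, "B": 511, "G2": 512}


def clip_levels(white: int, blacks: dict) -> dict:
    """Largest black-subtracted value each channel can reach."""
    return {ch: white - bl for ch, bl in blacks.items()}


def clipped_channels(selection_max: dict, limits: dict) -> list:
    """Channels whose black-subtracted maximum reaches its clip level."""
    return [ch for ch, m in selection_max.items() if m >= limits[ch]]


limits = clip_levels(WHITE_LEVEL, BLACK_LEVELS)
print(limits)  # {'R': 15872, 'G': 15872, 'B': 15872, 'G2': 15871}

# Maxima reported for the clipped specular-highlight selection (main subframe)
main_max = {"R": 15872, "G": 15872, "B": 15872, "G2": 15871}
print(clipped_channels(main_max, limits))  # ['R', 'G', 'B', 'G2']
```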

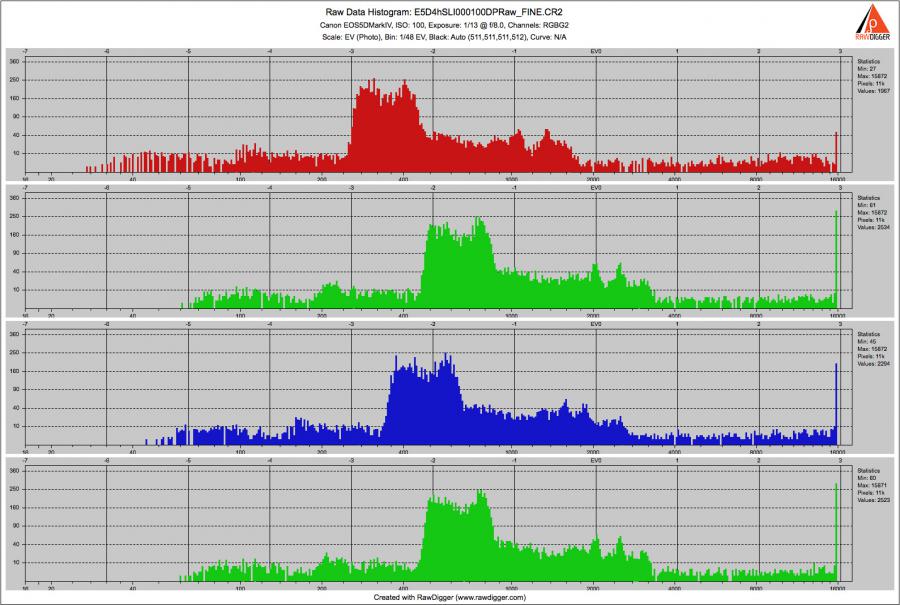

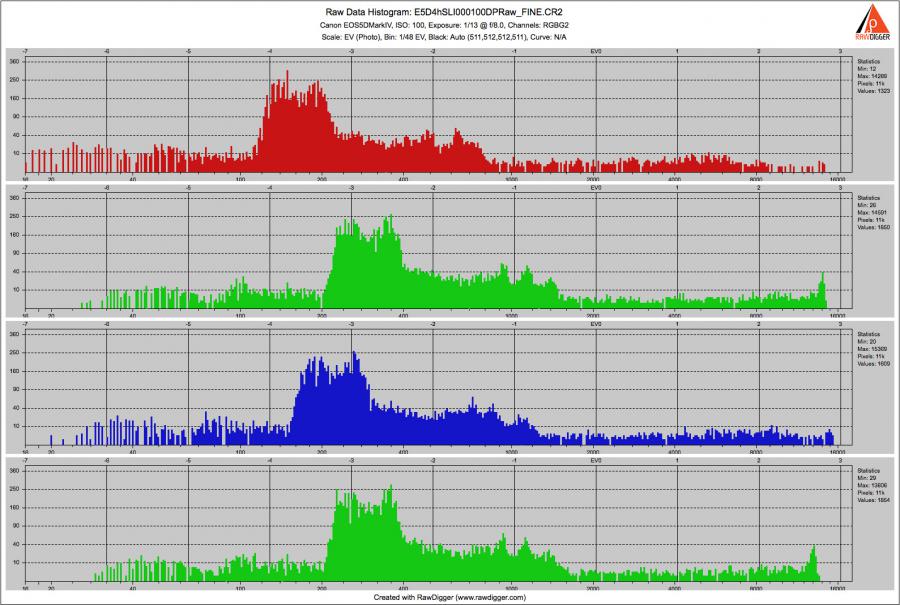

The histogram for this selection shows, as expected, a peak in the extreme highlights in all 4 channels.

This is all business as usual: with all 4 channels at their maximum, no highlight reconstruction is possible.
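A minimal sketch of why fully clipped areas cannot be rebuilt: classify a 2x2 CFA quad by how many of its channels sit at their clip level. This is an illustration only, assuming black-subtracted values and the clip levels derived above; real highlight-reconstruction algorithms are far more elaborate, but all of them need at least one unclipped channel to work from.

```python
# Illustrative sketch (hypothetical helper, not RawDigger's logic).
# Clip levels are the black-subtracted limits for this file.
CLIP = {"R": 15872, "G": 15872, "B": 15872, "G2": 15871}


def quad_status(quad: dict, limits: dict = CLIP) -> str:
    """Classify one 2x2 CFA quad by how many channels are clipped."""
    n_clipped = sum(1 for ch, v in quad.items() if v >= limits[ch])
    if n_clipped == 0:
        return "unclipped"
    if n_clipped < len(quad):
        return "partial"        # some channels still carry information
    return "unrecoverable"      # every channel clipped: nothing to rebuild from


print(quad_status({"R": 15872, "G": 15872, "B": 15872, "G2": 15871}))  # unrecoverable
print(quad_status({"R": 9000, "G": 15872, "B": 7000, "G2": 15871}))    # partial
```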

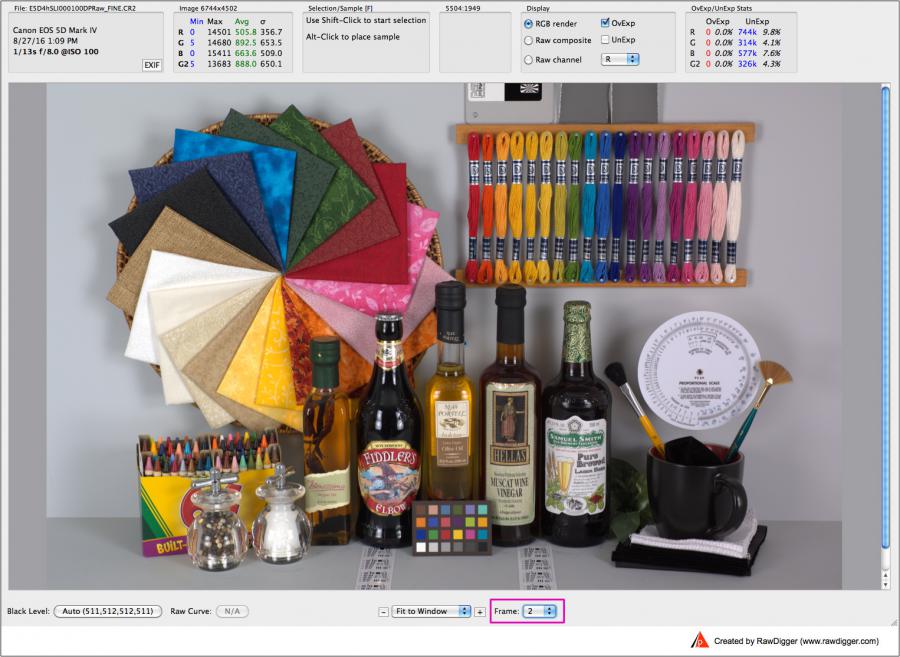

Now let's inspect the second, auxiliary subframe. The maximum values for the same selection are now lower, and there is no overexposure indication (no red overlay on the highlight). Also, the per-channel maxima now differ from one another.

The histogram shows the same: no sharp peaks against the right edge. So the auxiliary subframe is not hard-clipped.

The highlights are preserved in the auxiliary subframe, while they are clipped in the main subframe. The full 14-bit range is used in both the main and the auxiliary subframes, and there are no voids in the histograms that would indicate digital manipulation to fill the range.
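Checking a histogram for voids can be sketched as below: list the DN values inside the occupied range that never occur. This is a simplified illustration with a hypothetical helper. With few pixels, empty bins appear by chance, so what hints at digital stretching is a regular pattern of gaps over a large sample, for example every other value missing after a digital x2 gain.

```python
from collections import Counter


def histogram_voids(values, lo=None, hi=None):
    """DN values in [lo, hi] that never occur in `values`.

    Defaults to the range actually spanned by the data.
    """
    counts = Counter(values)
    if not counts:
        return []
    lo = min(counts) if lo is None else lo
    hi = max(counts) if hi is None else hi
    return [v for v in range(lo, hi + 1) if counts[v] == 0]


# A range "stretched" by a digital x2 gain only ever produces even values
stretched = [2 * v for v in range(1000)]
print(len(histogram_voids(stretched)))  # 999 empty odd values between 0 and 1998
```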

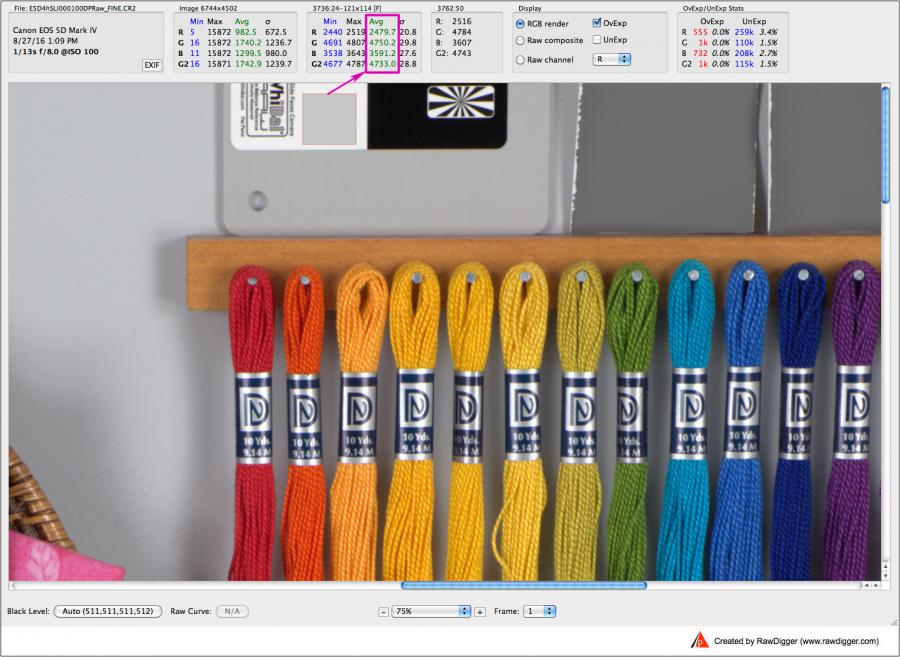

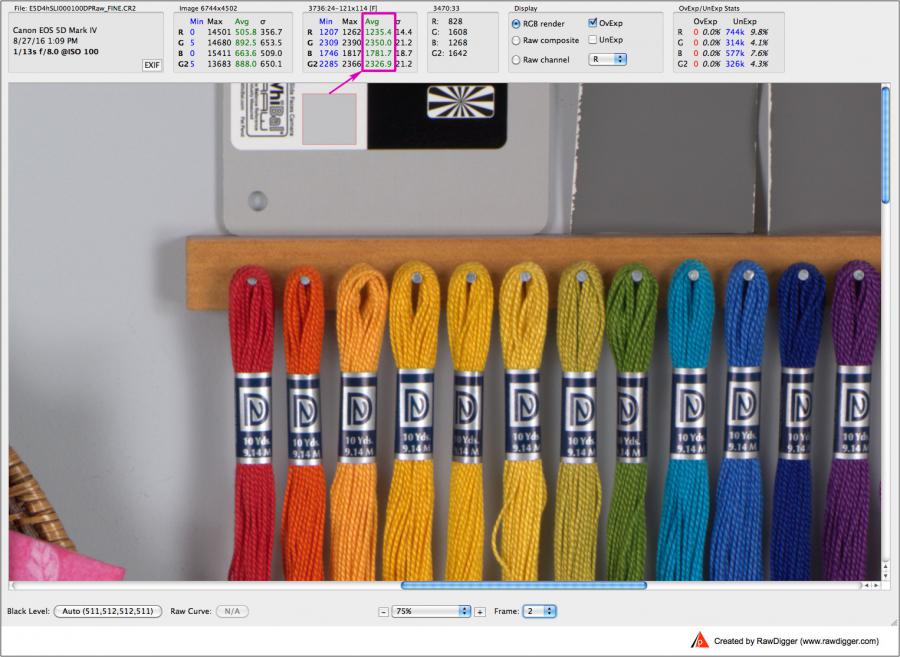

In other words, the auxiliary subframe is effectively "underexposed by 1 stop" compared to the main subframe. For example, sampling the gray WhiBal card in the shot shows that the values in the main subframe are nearly 2x those in the auxiliary subframe:

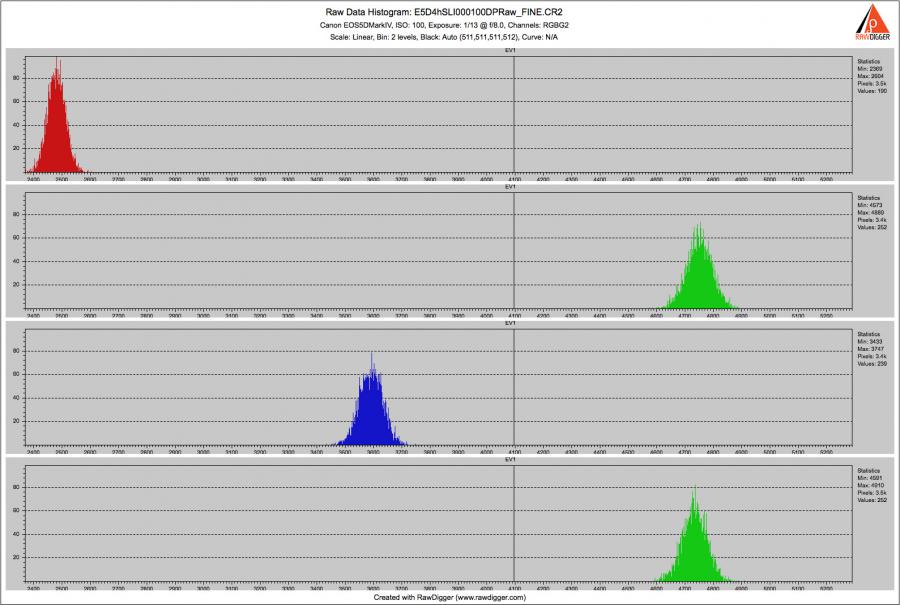

Also see the histogram for the sampled area of the main frame:

and the same area of the auxiliary frame:

Channel averages for the selection on the main subframe vs. the auxiliary subframe:

| Channel | Main subframe | Auxiliary subframe |
|---|---|---|
| Red | 2481.7 | 1236.1 |
| Green | 4749.6 | 2349.9 |
| Blue | 3591.7 | 1781.7 |
| Green2 | 4733.3 | 2327.6 |

This confirms that the main subframe is nearly 2x (1 stop) brighter than the auxiliary subframe, and that the auxiliary subframe can be used for highlight recovery: an additional stop of highlights that is clipped in the main subframe is preserved in the auxiliary one, effectively providing one more stop of headroom. Taken together, the main and auxiliary subframes of this camera's dual-pixel raw file carry 15 bits of raw data.
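The ratio-to-stops arithmetic, using the channel averages from the table, as a short Python sketch: log2 of the main/auxiliary ratio gives the exposure difference in stops.

```python
import math

# Channel averages for the WhiBal card selection: (main, auxiliary)
AVERAGES = {
    "Red": (2481.7, 1236.1),
    "Green": (4749.6, 2349.9),
    "Blue": (3591.7, 1781.7),
    "Green2": (4733.3, 2327.6),
}


def exposure_difference(main: float, aux: float) -> tuple:
    """Return (ratio, difference in stops) between main and auxiliary values."""
    ratio = main / aux
    return ratio, math.log2(ratio)


for channel, (main, aux) in AVERAGES.items():
    ratio, stops = exposure_difference(main, aux)
    print(f"{channel:6s} ratio {ratio:.3f}  difference {stops:.3f} stops")

# 14 bits per subframe plus ~1 stop of extra headroom from the auxiliary subframe
print("effective bits:", 14 + round(exposure_difference(*AVERAGES["Green"])[1]))
```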

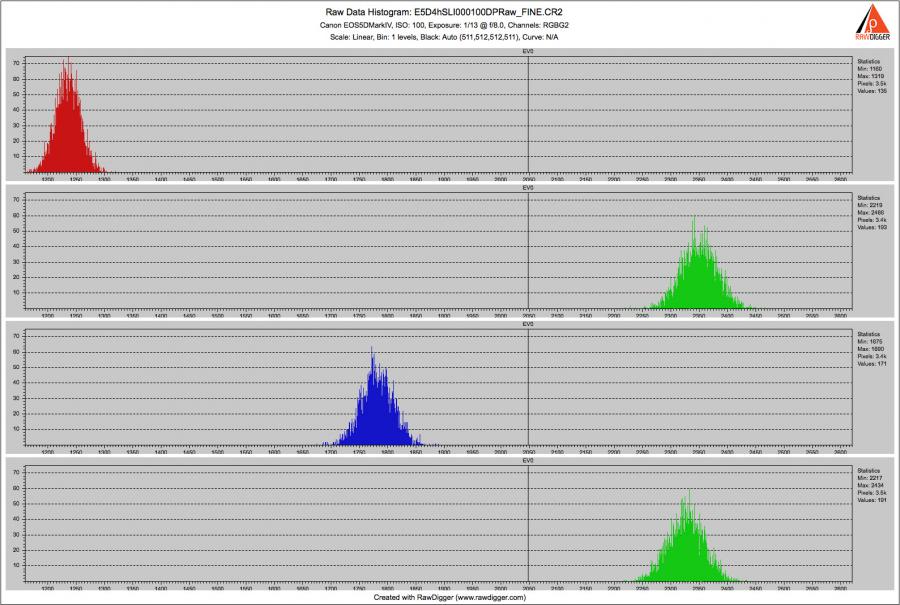

Here is how you can check that the main subframe of the dual-pixel raw is the same as a regular raw. Compare two shots in Fine mode: the main frame from a dual-pixel raw (the left part of the picture below, with its histogram) and a "regular" Fine raw (the right part of the picture below, with its histogram).

As you can see, they are identical within the margin of error of the experiment.
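A sketch of the comparison step, assuming you have already measured per-channel means (or other statistics) for the same area in both files. The helper name is hypothetical, and the 2% relative tolerance is an arbitrary placeholder for "the margin of error of the experiment", not a value from this test.

```python
import math


def same_within_tolerance(a: dict, b: dict, rel_tol: float = 0.02) -> bool:
    """True if every per-channel statistic in `a` matches `b` within rel_tol."""
    if a.keys() != b.keys():
        raise ValueError("both measurements must cover the same channels")
    return all(math.isclose(a[ch], b[ch], rel_tol=rel_tol) for ch in a)
```

Usage: pass the per-channel means of the dual-pixel main frame and of the regular raw, e.g. `same_within_tolerance(dual_main_means, regular_means)`.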

Our sincerest gratitude to the Imaging Resource team for their continuous work and for making raw samples available to the community.

PS: Low-ISO raw files from other recent Canon models do not reach 16383, the maximum value possible with 14-bit data; the Canon 5D Mark IV does. So the main subframe may be formed by adding the data numbers from both subpixels and clipping the sum at 16383, while the auxiliary subframe contains the data from only one set of subpixels and, at base ISO, appears to be "full-well limited".
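The hypothesis in the PS, and how it makes highlight recovery possible, can be modelled in a few lines. This is a toy model under stated assumptions (black-subtracted values, main = A+B clipped at 15872, auxiliary = A with no clipping of its own), not Canon's documented pipeline; the ratio of 2.0 stands in for the per-channel ratios measured on the WhiBal card, and the function names are hypothetical. A practical implementation would also blend near the clip point to avoid a visible seam.

```python
# Toy model: main = A+B clipped to the 14-bit range, aux = A alone.
CLIP = 16383 - 511  # 15872, black-subtracted clip level for R/G/B in this file


def simulate_subframes(a_vals, b_vals, clip=CLIP):
    """Build (main, aux) subframes from hypothetical per-subpixel signals."""
    main = [min(a + b, clip) for a, b in zip(a_vals, b_vals)]
    aux = list(a_vals)
    return main, aux


def recover_highlights(main, aux, ratio=2.0, clip=CLIP):
    """Keep unclipped main values; replace clipped ones with ratio * aux.

    If aux is clipped as well, the result is only a lower bound.
    """
    return [float(m) if m < clip else ratio * x for m, x in zip(main, aux)]


main, aux = simulate_subframes([1000, 9000], [1000, 9000])
print(main)                           # [2000, 15872]
print(recover_highlights(main, aux))  # [2000.0, 18000.0]
```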

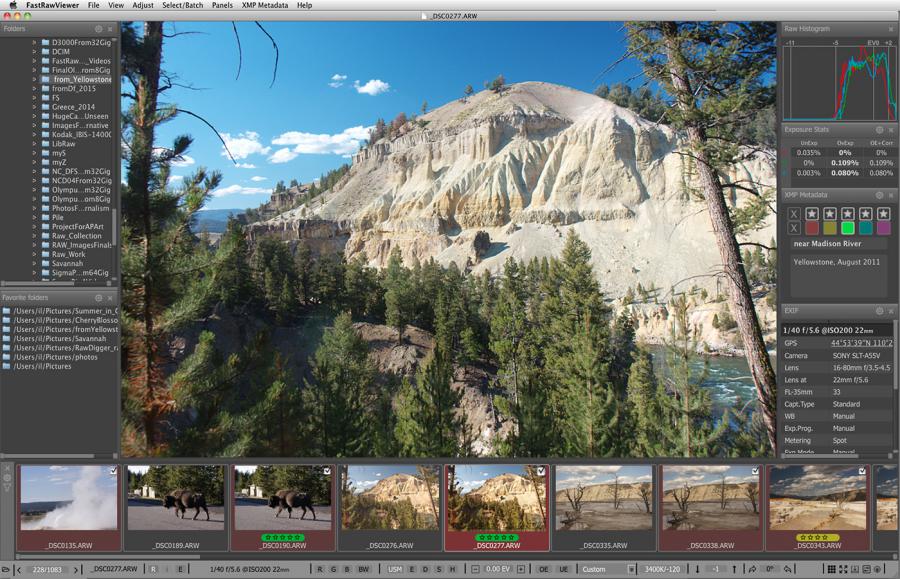

23 Comments

Submitted by CarVac (not verified) on

Wouldn't this fail to properly recover highlights in blurred areas, since the content of the two channels would be significantly different?

I had hoped they would just record each channel individually to prevent clipping issues like this.

Submitted by LibRaw on

> Wouldn't this fail to properly recover highlights in blurred areas, since the content of the two channels would be significantly different?

It should not, as highlights are seldom highly textured.

> I had hoped they would just record each channel individually to prevent clipping issues like this.

That would create 2 problems. First, all third-party converters would be in trouble. Second, from the point of view of design, quality control, and repairs, keeping the "regular" CR2 and the main frame of a dual-pixel CR2 the same saves a lot of headaches.

Submitted by CarVac (not verified) on

Also, this makes it seem like a true ISO 50 is possible, but based on the samples, it's not actually achieved?

Submitted by LibRaw on

I think that, in a way, the auxiliary subframe is a sort of ISO 50 equivalent. The usability of much of the last stop in the highlights depends on the non-linearity in the highlights and on how software uses the photon transfer curve to linearize it. If you look at the golden label and golden brush highlight areas, which are blown out in the main subframe, they show very little clipping and reasonable colour in the auxiliary subframe, so for now linearity looks pretty good; but more controlled shots are needed to get actual numbers.

Submitted by CarVac (not verified) on

So you suspect that the linearity might be why they didn't implement a true ISO 50?

I was wishing they would simply average the two sides instead of summing them, but when I look at the raw files the ISO 50 ones are indeed recorded twice as bright as ISO 100.

Submitted by LibRaw on

> you suspect that the linearity might be why they didn't implement a true ISO 50?

One of the possibilities, yes.

> I was wishing they would simply average the two sides instead of summing them

That's not exactly what you want to do in signal processing. The signals are slightly different, so you can't just average them: you need to normalize one to the other first, and that opens the way to pattern noise / banding. Canon has already taken enough criticism for banding not to risk that.
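The normalization step can be sketched as a least-squares gain between two series, fitted on samples where neither is clipped. This is an assumption-laden illustration: one global gain, black-subtracted data, a hypothetical function name. The banding risk comes precisely from the fact that the real mismatch can vary across the sensor (per column, per region), which a single global number does not capture.

```python
def estimate_gain(reference, other, clip):
    """Least-squares gain k minimizing sum((reference - k*other)**2).

    Fits a line through the origin (black-subtracted data assumed) and
    skips samples where either series is clipped.
    """
    pairs = [(r, o) for r, o in zip(reference, other) if r < clip and o < clip]
    den = sum(o * o for _, o in pairs)
    if den == 0:
        raise ValueError("no usable unclipped samples")
    return sum(r * o for r, o in pairs) / den


# The clipped third sample is ignored; the other two give k = 2.0
k = estimate_gain([200, 400, 15872], [100, 200, 9000], clip=15872)
normalized = [k * o for o in [100, 200, 9000]]
```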

A slight difference, however, will not cause problems when taking highlights from the last stop of the auxiliary subframe, because it is so subtle that it makes no perceivable difference, especially after demosaicking.

Submitted by CarVac (not verified) on

I see. So while the pixels' responses are close enough for summing (or averaging) over most of the dynamic range, pixel uniformity might suffer near the highlight limit, and that may be why they don't let us access it.

Submitted by LibRaw on

Please have a look at http://www.kamerabild.se/sites/kamerabild.se/files/_91A0045.CR2

You will see that in the auxiliary subframe the sky is far from clipped.

Main subframe:

Auxiliary subframe:

Submitted by Jack Hogan (not verified) on

Question: where would the additional highlight information of the half-pixel frame be stored? Half the pixel, half the FWC, right? E.g. saturation of the full pixel = 50ke-, saturation of the half pixel = 25ke-. In other words, don't they both saturate at the same exposure? So where does the highlight headroom come from?

Jack

Submitted by lexa on

In fact: half-pixel max level is the same as in 'full pixel' (16k minus bias).

And half-pixel values are ~1 stop below full-pixel values (not exactly, because of the different 'angle', but close enough for highlight recovery).

Submitted by Jack Hogan (not verified) on

Yes, in terms of DN since the gain is obviously maintained the same. But the saturation in FWC/micron^2 is a fixed characteristic of the pixel. So in e-, with the same exposure the half pixel saturates at about half the e- level of the full pixel because it has about half the area.

Look at the (SNR)^2 of the main and half-pixel frame G channel in the screen capture above: same exposure = signal of 25409e- for the full pixel vs 12287e- for the half pixel.
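The (SNR)^2 reasoning relies on photon shot noise being Poisson: in electrons the variance equals the mean, so SNR^2 equals the signal. A minimal sketch, assuming a uniform patch where shot noise dominates (read noise and PRNU ignored); the helper names are hypothetical.

```python
import math


def shot_noise_electrons(mean_dn: float, std_dn: float) -> float:
    """Signal in electrons from (SNR)^2 = mean^2 / variance."""
    return (mean_dn / std_dn) ** 2


def conversion_gain(mean_dn: float, std_dn: float) -> float:
    """Electrons per DN under the same assumptions: mean / variance."""
    return mean_dn / std_dn ** 2


# Ratio of the two electron counts quoted above (full pixel vs half pixel)
print(math.log2(25409 / 12287))  # roughly 1.05 stops
```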

Jack

Submitted by lexa on

It looks like the A+B value is not saturation-limited but ADC-range-limited.

Submitted by Jack Hogan (not verified) on

Unless I am missing something, it makes no difference.

Jack

Submitted by Jack Hogan (not verified) on

Thanks Alexa, I now see what you mean after having read the other related thread. So when you push exposure beyond clipping the main clips but the auxiliary does not, showing us highlight tones beyond what Canon considers 'safe' and wanted us to see. I wonder how non-linear it is and whether it can be rectified. It'd be fun to do PTC analysis on it.

Another question: if it were indeed A+B added digitally, we would expect the read noise of A+B to be higher than the noise of A or B in isolation. Since it isn't, wouldn't this point to A and B being combined in the analog domain (binned before the source follower) and read out only once to create the main frame?
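The expectation in this question rests on independent read noise adding in quadrature, so a digital sum of two separate reads is noisier than either read alone, by about sqrt(2) when the two are equal. A one-function sketch (hypothetical name):

```python
import math


def digital_sum_read_noise(sigma_a: float, sigma_b: float) -> float:
    """Read noise of A+B when A and B are read out separately and added digitally.

    Independent noise adds in quadrature: sqrt(sigma_a**2 + sigma_b**2).
    """
    return math.hypot(sigma_a, sigma_b)


# Equal per-half read noise: the digital sum is noisier by sqrt(2), ~1.414
print(digital_sum_read_noise(1.0, 1.0))
```

If A and B were instead binned in the analog domain and read out once, the A+B read noise would stay close to that of a single read, which is what the black-frame observation in the reply below suggests.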

Jack

Submitted by lexa on

It does not look like 'A+B added digitally': black-frame noise levels are mostly the same for A+B and B.

We do not have black frames on hand to analyze in depth.

Submitted by dgatwood (not verified) on

What were they thinking? They basically encoded (I think) the sum of the two images as the first image, plus one of the two images as the second (that's your one-stop difference). The result is that the auxiliary image takes a lot more space than it actually needs to. It seems to me that they should have combined sum-difference encoding, sign-magnitude encoding, and run-length encoding, as follows:

Sum-difference encoding.

What's important to note, at this point, is that except for the outliers where a pixel's values are very different on both sides (massively out-of-focus areas?), most of your values here should be very small positive or negative numbers, with many pixel values being nearly all zeroes or ones.

It is also important to understand that, assuming you don't have too few bits to work with, this translation is lossless. You can add the resulting parts together and get L+R+L-R = 2L. Shift by one bit, and you have L. Similarly, you can subtract these from each other and L+R-(L-R) = L+R-L+R = 2R. Shift by one bit, and you have R.
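The sum-difference transform, with its lossless round trip, as a minimal Python sketch (function names are hypothetical). The sum needs one more bit than each input and the difference needs a sign, which is the "too few bits" caveat. Since the file already holds A+B and A, B = (A+B) - A is recoverable either way; the suggestion is about making the second image compress better.

```python
def encode_sum_diff(left: int, right: int) -> tuple:
    """Sum-difference transform: (L + R, L - R)."""
    return left + right, left - right


def decode_sum_diff(s: int, d: int) -> tuple:
    """Invert the transform: s + d = 2L and s - d = 2R, so halving is exact."""
    return (s + d) // 2, (s - d) // 2


# Lossless round trip over a small grid of 14-bit values
for left in range(0, 16384, 4093):
    for right in range(0, 16384, 4093):
        assert decode_sum_diff(*encode_sum_diff(left, right)) == (left, right)
```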

Sign-magnitude encoding:

By making the leftmost digits zero, various compression algorithms work better, including the lossless JPEG that they're already using, I think. But the real benefit of having mostly zeroes comes when you do bitwise run-length encoding. (And the one-bit rotation is irrelevant if you do this.)
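One way to realize the sign-magnitude idea, sketched in Python: the magnitude goes in the high bits and the sign in the lowest bit. This layout is an assumption (a close relative of the zigzag encoding used by Protocol Buffers, not identical to it). Small negative differences then start with zeros instead of the leading ones of two's complement.

```python
def to_sign_magnitude(d: int) -> int:
    """Magnitude in the high bits, sign in the lowest bit (one possible layout)."""
    return (abs(d) << 1) | (1 if d < 0 else 0)


def from_sign_magnitude(v: int) -> int:
    """Inverse of to_sign_magnitude."""
    magnitude = v >> 1
    return -magnitude if v & 1 else magnitude


WIDTH = 16
for d in (3, -3):
    twos = d & ((1 << WIDTH) - 1)  # two's-complement bit pattern in WIDTH bits
    print(f"{d:+d}: two's complement {twos:0{WIDTH}b}, sign-magnitude {to_sign_magnitude(d):0{WIDTH}b}")
# +3: two's complement 0000000000000011, sign-magnitude 0000000000000110
# -3: two's complement 1111111111111101, sign-magnitude 0000000000000111
```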

Bitwise run-length encoding

I suspect that this relatively small amount of simple bit math would have been enough to reduce the dual-pixel RAW penalty enough to make it practical for everyone to just turn on dual-pixel RAW and leave it on. The CPU impact should be minimal: even my quick, hackish, naïve, non-vectorized implementation of most of this math ran 50 million times in under a second on a modern CPU, so the processing hit should be tiny, too.
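A bitwise run-length encoder can be sketched as alternating run lengths that always start with a run of zeros. This is a minimal illustration with hypothetical names; to actually save space, the run lengths would themselves need a compact code (for example a variable-length integer code), which is not shown.

```python
from itertools import groupby


def bit_runs(bits: str) -> list:
    """Run lengths of alternating bits, always starting with a run of '0's.

    A zero-length first run is inserted when the string starts with '1',
    so the decoder always knows which bit each run holds.
    """
    runs = [len(list(group)) for _, group in groupby(bits)]
    if bits.startswith("1"):
        runs.insert(0, 0)
    return runs


def from_runs(runs: list) -> str:
    """Inverse of bit_runs: even-indexed runs are '0's, odd-indexed runs are '1's."""
    return "".join(("0" if i % 2 == 0 else "1") * n for i, n in enumerate(runs))


# Small signed differences packed as 16-bit sign-magnitude words (sign in the LSB)
diffs = [0, 1, -1, 0, 2, 0]
bits = "".join(f"{(abs(d) << 1) | (d < 0):016b}" for d in diffs)
runs = bit_runs(bits)
print(len(bits), "bits ->", len(runs), "runs")
```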

Am I missing something subtle? Are the differences much larger than I think they are? Or did nobody at Canon take Dr. Langdon's data compression course (RIP)? :-)

Submitted by lexa on

Looks like Canon prefers compatibility over flash card space savings.

Submitted by dgatwood (not verified) on

Compatibility with what? It's a new format, and everybody had to implement support for it anyway....

Submitted by lexa on

For example, RawDigger works with the 5D Mark IV dual-pixel raw format without any major modifications (only the masked border sizes were added to internal tables).

Submitted by dgatwood (not verified) on

Pedantically, yes, it can decode the fractional image. But that's really not the interesting part of supporting dual-pixel RAW. After all, that secondary image is only the fractional image from one half of each pixel, which isn't something you would ever want to use by itself as an image. For example, if you look at sufficiently out-of-focus areas, IIRC, you'll see a semicircle where the bokeh ball should be. It looks ridiculous by itself.

The half image is only interesting in the context of software specifically designed to take that data and combine it with the full image in interesting ways. The code required to decode the image is at most a tiny fraction of the code required to support dual-pixel RAW in any *meaningful* way.

Encoding the primary (summed) image in the same way that they've always encoded it makes perfect sense from a compatibility perspective, because that enables RAW decoders to add basic support for the photos with only minimal effort. Encoding the fractional image in the same way? That's just wasting space, and that really adds up. As a result, most users will miss the opportunity to take advantage of this potentially powerful feature. And that's a shame, particularly given that any compatibility issue would be completely moot as long as Canon provided a reference implementation under a permissive license....

Submitted by Raoul (not verified) on

The fact that they record A+B and A, and not A+B and A-B, is probably because that is what they have directly available from the sensor/ADCs.

They need A+B before the analog-to-digital converter to get the maximum dynamic range, I guess. Then they also convert one half of the pixels (A) independently. Transferring both A and B would probably take more time (twice the A/D conversions), and B can be computed later (by our computer).
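Computing B later is a per-pixel subtraction. A minimal sketch, assuming both subframes have already had their own black levels subtracted and taking 15872 as the main subframe's clip level; the function name is hypothetical. Where the main value is clipped, A+B is unknown, so no B can be derived there.

```python
def extract_b(main, aux, clip):
    """Recover B = (A+B) - A per pixel from black-subtracted subframes.

    Where the main value is clipped, A+B is unknown, so B is returned as None.
    """
    return [m - a if m < clip else None for m, a in zip(main, aux)]


print(extract_b([2000, 15872], [1000, 9000], clip=15872))  # [1000, None]
```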

This is likely, as said above, given that the highlights are not clipped in A but are in A+B, while black noise levels are the same (according to the previous post; I didn't check it).

Raoul

Impact on depth of field

Submitted by Raoul (not verified) on

I was wondering...

If the final image is made from 2 sub-images slightly shifted in focus, does that mean the final A+B image will have a deeper depth of field than an image from a similar camera without dual-pixel raw (even if DPR recording is disabled, or for an out-of-camera JPEG)?

Does it also mean that by changing the mix ratio between A and B at sum time we could change the depth of field afterwards?

Raoul

Submitted by lexa on

Each half-pixel sees only half of the lens, so it is effectively stopped down by an extra ~1 stop (hence extra DoF).
